Archive - AZ-304: Microsoft Azure Architect Design

Sample Questions

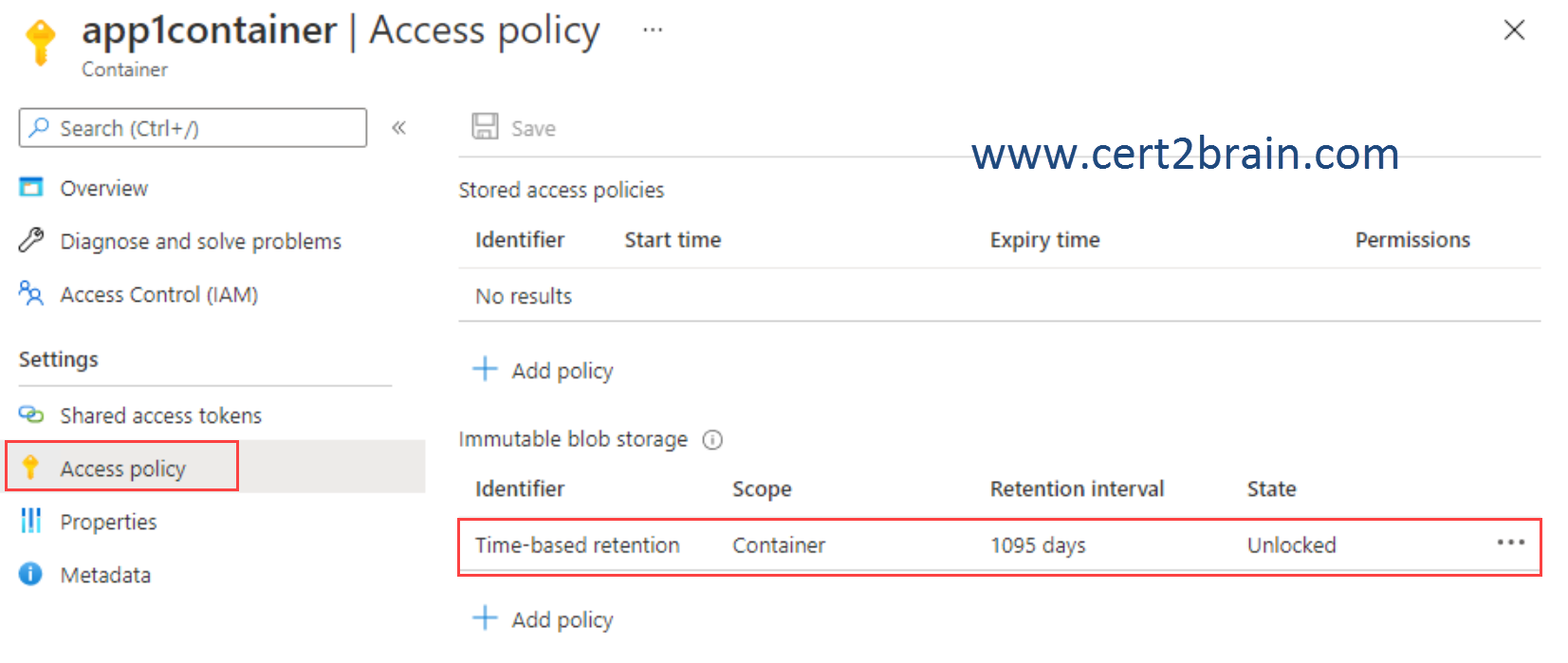

Question: 255

Measured Skill: Design data storage (15-20%)

Note: This question is based on a case study. The case study is not shown in this demo.

You migrate App1 to Azure.

You need to ensure that the data storage for App1 meets the security and compliance requirements.

What should you do?

| Option | Action |
|---|---|
| A | Create Azure RBAC assignments. |
| B | Create an access policy for the blob service. |
| C | Modify the access level of the blob service. |
| D | Implement Azure resource locks. |

Correct answer: B

Explanation:

The Security and Compliance Requirements section contains the following:

Once App1 is migrated to Azure, you must ensure that new data can be written to the app, and the modification of new and existing data is prevented for a period of three years.

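We should add a time-based retention policy for immutable blob storage, with a retention interval of three years, to the blob container's access policy and then lock the policy. A locked policy cannot be deleted, so new blobs can still be written while existing blobs cannot be modified or deleted until the retention period has elapsed.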
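The write-once, read-many behaviour that a locked time-based retention policy enforces can be modeled in a few lines. This is a minimal Python sketch, not the Azure SDK: the `ImmutableContainer` class, its method names, and the 1,095-day interval (three years, ignoring leap days) are assumptions made for illustration. It shows that new blobs can always be created, that existing blobs are never overwritten, that deletion is refused until the retention period has elapsed, and that a locked policy can be extended but not shortened.

```python
from datetime import datetime, timedelta


class ImmutableContainer:
    """Hypothetical in-memory model of a container with a time-based retention policy."""

    def __init__(self, retention_days: int) -> None:
        self.retention = timedelta(days=retention_days)
        self.locked = False
        self._blobs: dict[str, tuple[bytes, datetime]] = {}

    def lock(self) -> None:
        # Once locked, the policy can no longer be shortened.
        self.locked = True

    def set_retention(self, retention_days: int) -> None:
        new_retention = timedelta(days=retention_days)
        if self.locked and new_retention < self.retention:
            raise PermissionError("a locked policy can only be extended")
        self.retention = new_retention

    def write(self, name: str, data: bytes, now: datetime) -> None:
        # New blobs can always be created, but an existing blob is never overwritten.
        if name in self._blobs:
            raise PermissionError(f"{name} already exists and cannot be modified")
        self._blobs[name] = (data, now)

    def delete(self, name: str, now: datetime) -> None:
        # Deletion is refused until the blob's retention period has elapsed.
        _, created = self._blobs[name]
        expires = created + self.retention
        if now < expires:
            raise PermissionError(f"{name} is protected until {expires:%Y-%m-%d}")
        del self._blobs[name]

    def read(self, name: str) -> bytes:
        return self._blobs[name][0]


# Usage: three-year retention (1,095 days, ignoring leap days), locked immediately.
start = datetime(2021, 1, 1)
container = ImmutableContainer(retention_days=3 * 365)
container.lock()
container.write("orders-0001.json", b"{}", now=start)  # allowed: new data
try:
    container.delete("orders-0001.json", now=start + timedelta(days=365))
except PermissionError as err:
    print(err)  # orders-0001.json is protected until 2024-01-01
```

The model tracks retention from the time each blob was written, which is why a single locked policy covers both existing and future data.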

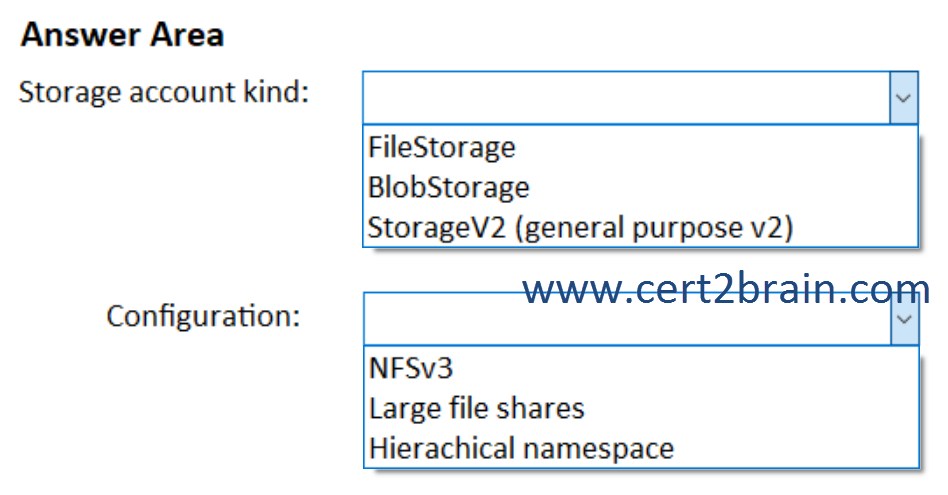

Question: 256

Measured Skill: Design data storage (15-20%)

Note: This question is based on a case study. The case study is not shown in this demo.

You plan to migrate App1 to Azure.

You need to implement the storage for App1 in Azure. The solution must meet the security and compliance requirements.

What should you use?

(To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.)

| Option | Storage account kind | Configuration |
|---|---|---|
| A | FileStorage | NFSv3 |
| B | FileStorage | Large file shares |
| C | BlobStorage | Hierarchical namespace |
| D | BlobStorage | NFSv3 |
| E | StorageV2 (general purpose v2) | Large file shares |
| F | StorageV2 (general purpose v2) | Hierarchical namespace |

Correct answer: C

Explanation:

The Security and Compliance Requirements section contains the following:

Once App1 is migrated to Azure, you must ensure that new data can be written to the app, and the modification of new and existing data is prevented for a period of three years.

From the On-premises Environment section, we know that App1 uses an external storage solution that provides Apache Hadoop-compatible storage.

Hadoop is an open-source software framework for storing data and running applications on clusters of commodity hardware. It scales storage and processing across many nodes, can store any kind of data, and can run a large number of concurrent tasks or jobs.

Azure Blob Storage provides a Hadoop-compatible interface that supports two kinds of blobs: block blobs and page blobs. The Hadoop Distributed File System (HDFS) uses a traditional hierarchical file organization, whereas cloud object storage services typically use a flat namespace with extensive metadata instead of a hierarchical namespace. To provide this compatibility, we have to enable the hierarchical namespace when creating the BlobStorage account.
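The practical difference between the two namespaces shows up in directory operations, which Hadoop jobs rely on, for example when a job renames its temporary output directory on commit. The following Python sketch is a hypothetical model, not an Azure API, and every class and path in it is illustrative: in a flat namespace a "directory" is only a shared name prefix, so renaming it means moving every blob under that prefix, whereas a hierarchical namespace moves the directory entry in a single operation.

```python
class FlatNamespace:
    """Hypothetical flat object store: 'out/_tmp/part-0' is just an opaque key."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}
        self.operations = 0

    def put(self, path: str, data: bytes) -> None:
        self.blobs[path] = data

    def rename_dir(self, old: str, new: str) -> None:
        # There is no directory object, so every blob under the prefix is moved one by one.
        for name in [n for n in self.blobs if n.startswith(old + "/")]:
            self.blobs[new + name[len(old):]] = self.blobs.pop(name)
            self.operations += 1


class HierarchicalNamespace:
    """Hypothetical hierarchical store: directories are real nodes in a tree."""

    def __init__(self) -> None:
        self.root: dict = {}
        self.operations = 0

    def _dir(self, path: str) -> dict:
        # Walk (and create if needed) the directory nodes along the path.
        node = self.root
        for part in path.split("/"):
            if part:
                node = node.setdefault(part, {})
        return node

    def put(self, path: str, data: bytes) -> None:
        parent, _, leaf = path.rpartition("/")
        self._dir(parent)[leaf] = data

    def rename_dir(self, old: str, new: str) -> None:
        # Re-parenting one directory node is a single operation, however many files it holds.
        old_parent, _, old_leaf = old.rpartition("/")
        new_parent, _, new_leaf = new.rpartition("/")
        moved = self._dir(old_parent).pop(old_leaf)
        self._dir(new_parent)[new_leaf] = moved
        self.operations += 1


flat, hns = FlatNamespace(), HierarchicalNamespace()
for i in range(1000):
    flat.put(f"out/_tmp/part-{i:04d}", b"...")
    hns.put(f"out/_tmp/part-{i:04d}", b"...")
flat.rename_dir("out/_tmp", "out/final")
hns.rename_dir("out/_tmp", "out/final")
print(flat.operations, hns.operations)  # 1000 1
```

The operation counters are the point of the sketch: the flat model's cost grows with the number of files, while the hierarchical model's cost does not.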

Reference: Hadoop Azure Support: Azure Blob Storage

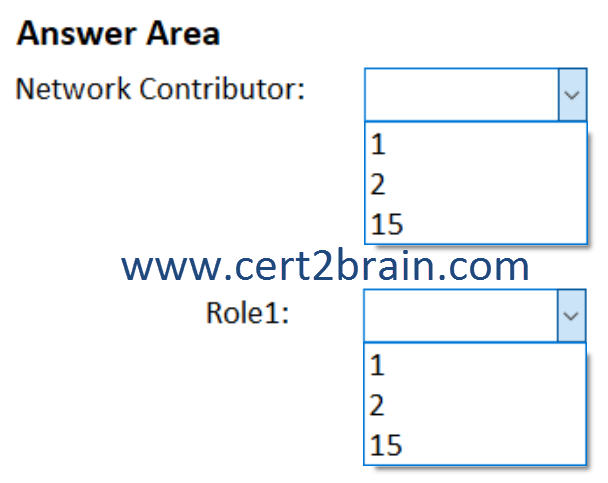

Question: 257

Measured Skill: Design identity and security (25-30%)

Note: This question is based on a case study. The case study is not shown in this demo.

You need to implement the Azure RBAC role assignments. The solution must meet the authentication and authorization requirements.

How many assignments should you configure for the Network Contributor role and for Role1?

(To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.)

| Option | Network Contributor assignments | Role1 assignments |
|---|---|---|
| A | 1 | 1 |
| B | 1 | 2 |
| C | 2 | 2 |
| D | 15 | 2 |
| E | 15 | 15 |
| F | 1 | 15 |

Correct answer: C

Explanation:

The Authentication and Authorization Requirements section contains the following:

The Network Contributor built-in RBAC role must be used to grant permission to all the virtual networks in all the Azure subscriptions.

Role1 must be used to assign permissions to the storage accounts of all the Azure subscriptions.

RBAC roles must be applied at the highest level possible.

From the scenario, we know that Litware has two Azure tenants: one with 10 subscriptions and one with five subscriptions. Because a management group hierarchy cannot span tenants, we can place the subscriptions of each tenant in a management group and assign the Network Contributor role and Role1 at the management group level. This requires two assignments for each role, one per tenant, instead of 15 assignments at the subscription level.
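The count follows from scope arithmetic. A management group hierarchy cannot span tenants, so the highest scope shared by a tenant's subscriptions is a management group in that tenant, and each role needs one assignment per tenant. The Python sketch below is illustrative only: the tenant and subscription names are hypothetical, and it simply compares assigning each role at subscription scope with assigning it once per tenant at management group scope.

```python
# Hypothetical inventory: tenant -> subscriptions (10 in one tenant, 5 in the other).
inventory = {
    "tenant-1": [f"sub-1-{i}" for i in range(1, 11)],
    "tenant-2": [f"sub-2-{i}" for i in range(1, 6)],
}
roles = ["Network Contributor", "Role1"]


def count_at_subscription_scope(inventory, roles):
    # One assignment per role in every subscription.
    total = sum(len(subs) for subs in inventory.values())
    return {role: total for role in roles}


def count_at_management_group_scope(inventory, roles):
    # A management group cannot span tenants, so the highest shared scope is one
    # management group per tenant; its subscriptions inherit the assignment.
    return {role: len(inventory) for role in roles}


print(count_at_subscription_scope(inventory, roles))      # {'Network Contributor': 15, 'Role1': 15}
print(count_at_management_group_scope(inventory, roles))  # {'Network Contributor': 2, 'Role1': 2}
```

Assigning at the higher scope also means that any subscription added to either management group later inherits both roles without a new assignment.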

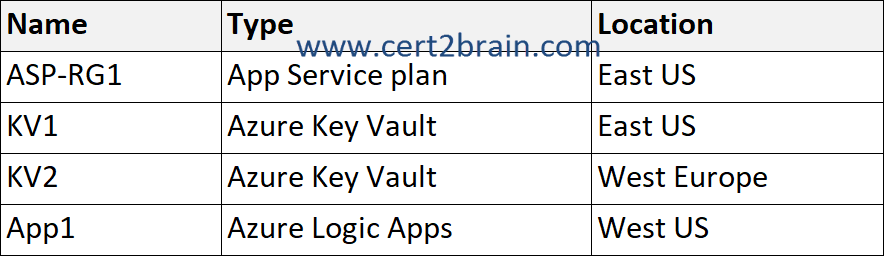

Question: 258

Measured Skill: Design identity and security (25-30%)

You have a resource group named RG1 that contains the objects shown in the following table.

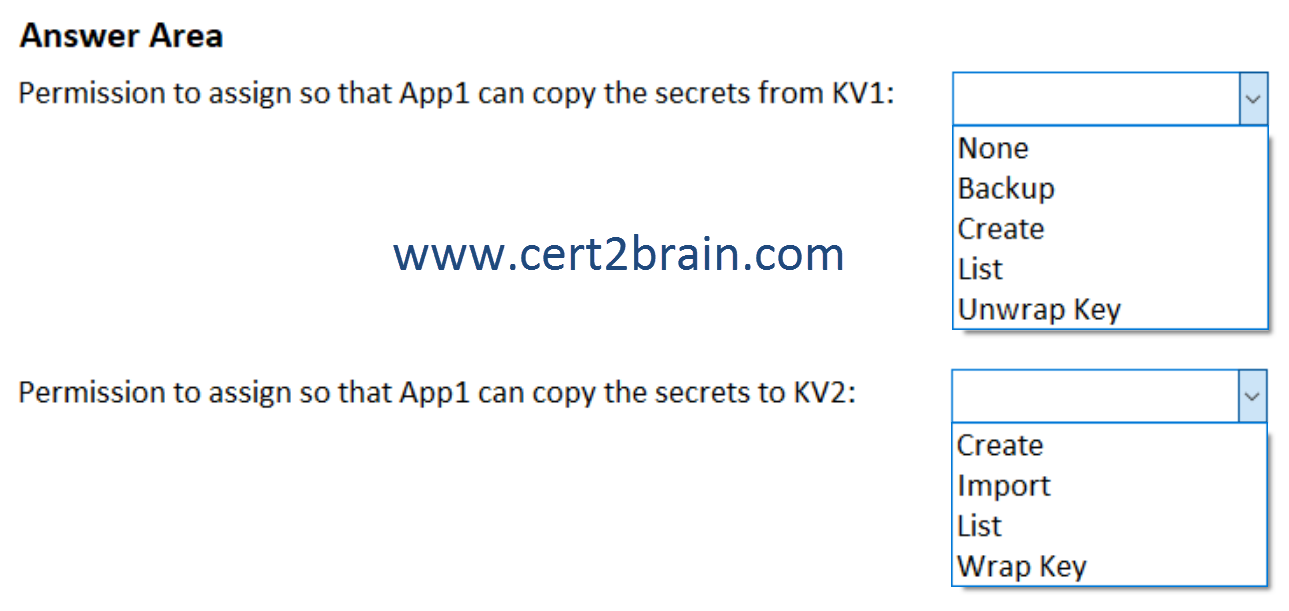

You need to configure permissions so that App1 can copy all the secrets from KV1 to KV2. App1 currently has the Get permission for the secrets in KV1.

Which additional permissions should you assign to App1?

(To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.)

| Option | Permission to assign so that App1 can copy the secrets from KV1 | Permission to assign so that App1 can copy the secrets to KV2 |
|---|---|---|
| A | None | Create |
| B | Backup | Import |
| C | Create | List |
| D | List | Import |
| E | Unwrap Key | Create |
| F | Unwrap Key | Wrap Key |

Correct answer: A

Explanation:

The Get permission for secrets allows App1 to retrieve the secrets stored in KV1 as plain text. No additional permissions are required on KV1 to get the secrets.

To ensure that App1 can copy the secrets to KV2, we need to assign the Create Key permission. The Create Key operation creates a new key, stores it, and then returns the key parameters and attributes to the client.

If you want to try this in your lab, use the Postman app and the tutorial for REST API calls below.

References:

How To Access Azure Key Vault Secrets Through Rest API Using Postman

Download Postman

Azure Key Vault REST API reference

Question: 259

Measured Skill: Design data storage (15-20%)

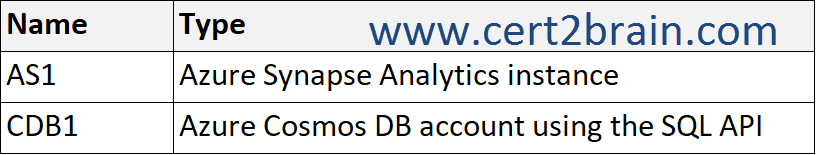

You are an administrator for a company. You have the resources shown in the following table.

CDB1 hosts a container that stores continuously updated operational data.

You are designing a solution that will use AS1 to analyze the operational data daily.

You need to recommend a solution to analyze the data without affecting the performance of the operational data store.

What should you include in the recommendation?

| Option | Recommendation |
|---|---|
| A | Azure Data Factory with Azure Cosmos DB and Azure Synapse Analytics connectors |
| B | Azure Synapse Analytics with PolyBase data loading |
| C | Azure Cosmos DB change feed |
| D | Azure Synapse Link for Azure Cosmos DB |

Correct answer: B

Explanation:

Traditional SMP data warehouses use an Extract, Transform, and Load (ETL) process for loading data. A dedicated SQL pool in Azure Synapse Analytics uses a massively parallel processing (MPP) architecture that takes advantage of the scalability and flexibility of compute and storage resources. An Extract, Load, and Transform (ELT) process can take advantage of the pool's built-in distributed query processing capabilities and eliminates the resources needed to transform the data before loading.

PolyBase is a technology that loads external data via the T-SQL language using an ELT process.

Extract, Load, and Transform (ELT) is a process by which data is extracted from a source system, loaded into a data warehouse, and then transformed.

The basic steps for implementing a PolyBase ELT for dedicated SQL pool are:

- Extract the source data into text files.

- Land the data into Azure Blob storage or Azure Data Lake Store.

- Prepare the data for loading.

- Load the data into dedicated SQL pool staging tables using PolyBase.

- Transform the data.

- Insert the data into production tables.
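The order of these steps is what distinguishes ELT from ETL: the raw data is loaded first and only transformed inside the warehouse. The Python sketch below mirrors the six steps with in-memory stand-ins (a dictionary for Blob storage, lists for the staging and production tables). It does not call PolyBase or any Azure service, and all names and sample rows in it are hypothetical.

```python
import csv
import io

FIELDS = ["order_id", "amount", "country"]

# Hypothetical source rows in the operational system.
source_rows = [
    {"order_id": "1", "amount": "19.90", "country": "us"},
    {"order_id": "2", "amount": "5.00", "country": "de"},
]


def extract(rows):
    # Step 1: export the source data as delimited text.
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def prepare(text):
    # Step 3: stand-in for preparation; only checks the file layout.
    if text.splitlines()[0] != ",".join(FIELDS):
        raise ValueError("unexpected file layout")
    return text


def load(text):
    # Step 4: copy the raw text into a staging table unchanged (all values stay strings).
    return list(csv.DictReader(io.StringIO(text)))


def transform(staging):
    # Step 5: cast types and normalize values only after the data is loaded.
    return [
        {
            "order_id": int(row["order_id"]),
            "amount": float(row["amount"]),
            "country": row["country"].upper(),
        }
        for row in staging
    ]


# Step 2: land the text file in simulated Blob storage (a dict keyed by path).
blob_storage = {"landing/orders.csv": extract(source_rows)}
staging_table = load(prepare(blob_storage["landing/orders.csv"]))

# Step 6: insert the transformed rows into the production table.
production_table = []
production_table.extend(transform(staging_table))
print(production_table)
```

Keeping the staging table untransformed mirrors the ELT idea that the warehouse, not a separate ETL tier, does the transformation work.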

Reference: Design a PolyBase data loading strategy for dedicated SQL pool in Azure Synapse Analytics