Microsoft - AZ-305: Designing Microsoft Azure Infrastructure Solutions

Sample Questions

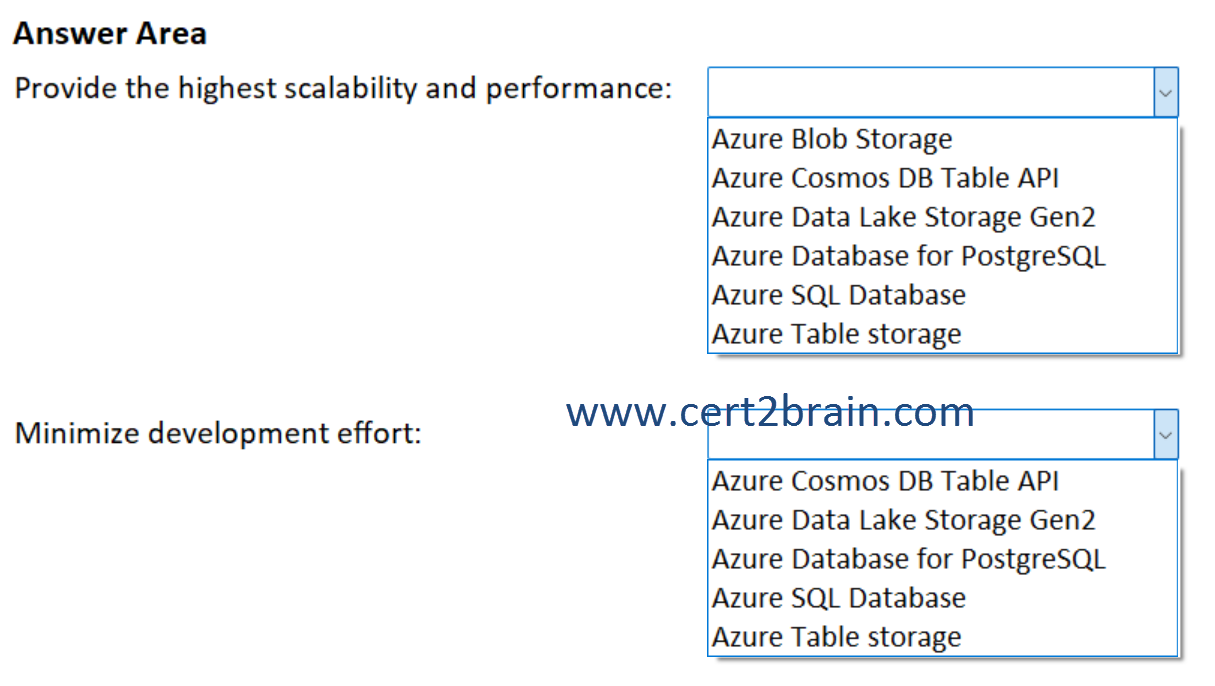

Question: 449

Measured Skill: Design infrastructure solutions (25-30%)

You have an on-premises app named App1 that reads data from a table named Table1, writes new data to Table1, and scans Table1 for aggregate statistics. App1 uses hard-coded partition keys for all read and write operations.

You plan to migrate App1 to a new platform in Azure.

You need to recommend a solution that meets the following requirements:

- Provides the highest scalability and performance.

- Minimizes development effort.

What should you include in the recommendation for each requirement?

(To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.)

| A | Provide the highest scalability and performance: Azure Blob Storage; Minimize development effort: Azure Data Lake Storage Gen2 |

| B | Provide the highest scalability and performance: Azure Cosmos DB Table API; Minimize development effort: Azure Table storage |

| C | Provide the highest scalability and performance: Azure Data Lake Storage Gen2; Minimize development effort: Azure Database for PostgreSQL |

| D | Provide the highest scalability and performance: Azure Database for PostgreSQL; Minimize development effort: Azure Table storage |

| E | Provide the highest scalability and performance: Azure SQL Database; Minimize development effort: Azure Cosmos DB Table API |

| F | Provide the highest scalability and performance: Azure Table storage; Minimize development effort: Azure SQL Database |

Correct answer: B

Explanation:

Azure Cosmos DB for Table is a fully managed NoSQL database service that enables you to store, manage, and query large volumes of key-value data using the familiar Azure Table storage APIs. This API is designed for applications that need scalable, high-performance storage for structured/nonrelational data. This API is also compatible with existing Azure Table Storage software development kits (SDKs) and tools.

The API for Table is optimized for storing and retrieving key-value and tabular data. Each table consists of entities (rows) identified by a unique combination of partition key and row key, with flexible properties for each entity. This model is ideal for scenarios such as device registries, user profiles, configuration data, and other applications that require fast lookups and simple queries over large datasets.
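The entity model described above can be sketched with a small in-memory stand-in. This is not the Azure SDK: the `InMemoryTable` class, its method names, and the sample keys and values are hypothetical and serve only to show how a partition key plus row key lookup differs from a full scan for aggregate statistics.

```python
class InMemoryTable:
    """Toy stand-in for a table keyed by (partition key, row key)."""

    def __init__(self):
        # partition key -> {row key -> entity properties}
        self._partitions = {}

    def upsert(self, partition_key, row_key, **properties):
        # Entities are schemaless: each one carries its own properties.
        rows = self._partitions.setdefault(partition_key, {})
        rows[row_key] = dict(properties)

    def get(self, partition_key, row_key):
        # Point lookup: touches one partition and one row.
        return self._partitions[partition_key][row_key]

    def scan(self):
        # Full scan: visits every partition, as an aggregate query would.
        for partition_key, rows in self._partitions.items():
            for row_key, entity in rows.items():
                yield partition_key, row_key, entity


table = InMemoryTable()
table.upsert("device-eu", "sensor-001", temperature=21.5)
table.upsert("device-eu", "sensor-002", temperature=19.0)
table.upsert("device-us", "sensor-001", temperature=25.5)

# Read with a hard-coded partition key and row key, as App1 does.
reading = table.get("device-eu", "sensor-002")

# Aggregate statistic computed by scanning the whole table.
temperatures = [entity["temperature"] for _, _, entity in table.scan()]
average = sum(temperatures) / len(temperatures)
```

The point lookup stays cheap however many partitions exist, while the scan grows with the table, which is why the aggregate workload is the part that benefits most from a scalable backend.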

Azure Cosmos DB for Table uses a table-like data model, supports the reads, writes, and scans that App1 performs, and works with App1's hard-coded partition keys. We should therefore choose Azure Cosmos DB for Table to meet the "Provide the highest scalability and performance" requirement.

Azure Table storage should be used to meet the "Minimize development effort" requirement. Azure Table storage is a service that stores non-relational structured data (also known as structured NoSQL data) in the cloud, providing a key/attribute store with a schemaless design. Because Table storage is schemaless, it's easy to adapt your data as the needs of your application evolve. Access to Table storage data is fast and cost-effective for many types of applications, and is typically lower in cost than traditional SQL for similar volumes of data.

Azure Table storage also uses a table/partition key model and is highly compatible with existing table-based apps. Azure Table storage often requires little to no code change and is simpler to adopt than Cosmos DB.

References:

What is Azure Cosmos DB for Table?

What is Azure Table storage?

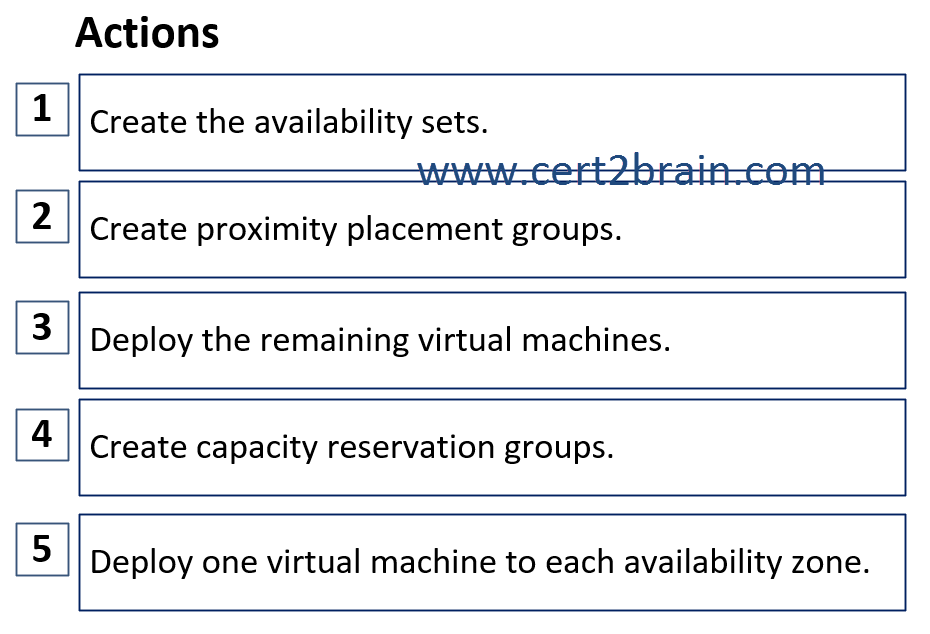

Question: 450

Measured Skill: Design business continuity solutions (10-15%)

You have an Azure subscription.

As part of a highly available deployment, you plan to deploy 30 virtual machines to an Azure region that contains three availability zones.

You need to recommend a deployment solution for the virtual machines, availability sets, and availability zones. The solution must maximize the availability of the deployment.

Which four actions should you recommend be performed in sequence?

(To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.)

| A | Sequence: 2, 1, 5, 3 |

| B | Sequence: 4, 1, 5, 3 |

| C | Sequence: 4, 2, 5, 3 |

| D | Sequence: 2, 5, 1, 3 |

Correct answer: B

Explanation:

To maximize availability across three availability zones with 30 VMs, we need to combine availability zones, which provide datacenter-level isolation, with availability sets, which provide fault and update domain isolation within a zone.

First, we should create capacity reservation groups. Using capacity reservation groups ensures Azure has enough compute capacity in each availability zone before we deploy a large number of VMs. This avoids deployment failures and supports high availability at scale.

Second, we should create the availability sets. Availability sets must exist before the VMs are deployed. The availability sets are used to distribute the VMs across fault and update domains within each zone.

Third, we deploy one virtual machine to each availability zone to ensure zone-level resilience from the beginning.

Fourth, we evenly distribute the remaining virtual machines into the appropriate availability sets and zones.
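The even distribution in the last two steps can be sketched as a round-robin assignment. This is only an illustration of the arithmetic, not a deployment script: the `distribute_vms` function, the VM names, and the zone labels are hypothetical.

```python
def distribute_vms(vm_count, zones):
    """Assign VM names to zones round-robin so zone sizes differ by at most one."""
    placement = {zone: [] for zone in zones}
    for index in range(vm_count):
        zone = zones[index % len(zones)]
        placement[zone].append(f"vm{index + 1:02d}")
    return placement


# 30 VMs across the three availability zones of the region.
placement = distribute_vms(30, ["zone-1", "zone-2", "zone-3"])
counts = {zone: len(vms) for zone, vms in placement.items()}

# The first VM in each zone corresponds to the "one VM per zone" step.
first_per_zone = {zone: vms[0] for zone, vms in placement.items()}
```

With 30 VMs and three zones, each zone ends up with 10 VMs, so losing a single zone leaves two thirds of the deployment running.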

Note: Proximity placement groups optimize low latency, not availability. Proximity placement groups can actually reduce fault isolation.

References:

Understanding Availability Sets and Availability Zones

Create a capacity reservation

Proximity placement groups

Question: 451

Measured Skill: Design infrastructure solutions (25-30%)

You have an Azure subscription that contains an Azure Service Bus Premium namespace named Namespace1 and an Azure Event Grid custom topic named Topic1.

You need to route events from Namespace1 to Topic1.

What should you create first in Event Grid?

| A | A system topic |

| B | A partner topic |

| C | A message |

| D | A domain |

Correct answer: A

Explanation:

To route events from Azure Service Bus to Azure Event Grid, Event Grid needs a system topic that represents the Azure resource emitting events. The system topic acts as the event source that Event Grid can subscribe to. Once the system topic exists, you can then create an event subscription that routes events to the custom Event Grid topic.

A system topic in Event Grid represents one or more events published by Azure services such as Azure Storage and Azure Event Hubs. For example, a system topic can represent all blob events, or only the blob created and blob deleted events, published for a specific storage account. In this example, when a blob is uploaded to the storage account, the Azure Storage service publishes a blob created event to the system topic in Event Grid, which then forwards the event to the topic's subscribers, which receive and process it.
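The publish and subscribe flow in the storage example can be sketched with a toy in-memory topic. This is not the Event Grid SDK: the `Topic` class, its methods, and the subject strings are hypothetical, and the event type strings are used for illustration only.

```python
class Topic:
    """Toy topic that forwards published events to matching subscriptions."""

    def __init__(self, name):
        self.name = name
        # Each subscription is (set of included event types or None, handler).
        self._subscriptions = []

    def subscribe(self, handler, included_event_types=None):
        self._subscriptions.append((included_event_types, handler))

    def publish(self, event):
        for included, handler in self._subscriptions:
            # None means "all events"; otherwise filter by event type.
            if included is None or event["eventType"] in included:
                handler(event)


received = []
system_topic = Topic("storage-account-events")

# Subscribe only to blob created and blob deleted events.
system_topic.subscribe(
    received.append,
    included_event_types={
        "Microsoft.Storage.BlobCreated",
        "Microsoft.Storage.BlobDeleted",
    },
)

system_topic.publish({"eventType": "Microsoft.Storage.BlobCreated", "subject": "blobs/a.txt"})
system_topic.publish({"eventType": "Microsoft.Storage.BlobRenamed", "subject": "blobs/b.txt"})
```

Only the blob created event reaches the subscriber; the second event is published to the topic but filtered out by the subscription.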

Reference: System topics in Azure Event Grid

Question: 452

Measured Skill: Design infrastructure solutions (25-30%)

You have an Azure subscription that contains a virtual network named VNet1. VNet1 contains three virtual machines that host a web app named App1. The virtual machines are assigned only private IP addresses.

You need to recommend a solution to evenly distribute the traffic for App1 across the virtual machines. The solution must meet the following requirements:

- Ensure that App1 is accessible only from VNet1.

- Minimize administrative effort.

What should you include in the recommendation?

| A | Azure Front Door |

| B | Azure Traffic Manager |

| C | Azure Firewall |

| D | Azure Application Gateway |

Correct answer: D

Explanation:

Azure Application Gateway is a web traffic load balancer that helps you manage traffic to your web applications. Unlike traditional load balancers that route traffic based on IP address and port, Application Gateway makes intelligent routing decisions based on HTTP request attributes like URL paths and host headers.

For example, you can route requests with /images in the URL to servers optimized for images, while routing /video requests to servers optimized for video content. This application layer routing gives you more control over how traffic flows to your applications.
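The path-based routing idea can be sketched as follows. This is a simplified illustration rather than how Application Gateway is implemented or configured: the `PathRouter` class, the server names, and the plain prefix matching are assumptions made for the example.

```python
from itertools import cycle


class PathRouter:
    """Toy layer-7 router: pick a backend pool by URL path, then round-robin."""

    def __init__(self, rules, default_pool):
        # rules: list of (path prefix, list of backend servers), checked in order.
        self._rules = [(prefix, cycle(servers)) for prefix, servers in rules]
        self._default = cycle(default_pool)

    def route(self, path):
        for prefix, pool in self._rules:
            if path.startswith(prefix):
                return next(pool)
        # No rule matched: fall back to the default pool.
        return next(self._default)


router = PathRouter(
    rules=[("/images", ["img-1", "img-2"]), ("/video", ["vid-1"])],
    default_pool=["web-1", "web-2", "web-3"],
)

paths = ["/images/logo.png", "/images/banner.png", "/video/intro.mp4", "/index.html", "/about"]
targets = [router.route(path) for path in paths]
```

Requests under `/images` alternate between the two image servers, `/video` requests go to the video server, and everything else is spread evenly across the default pool, which mirrors the even distribution across the three App1 virtual machines.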

Application Gateway operates at the application layer (OSI layer 7) and provides features like SSL/TLS termination, autoscaling, zone redundancy, and integration with Web Application Firewall for security.

Azure Application Gateway can be deployed inside VNet1 with only a private frontend IP address, so App1 is accessible only from within VNet1.

References:

What is Azure Application Gateway?

Application Gateway infrastructure configuration

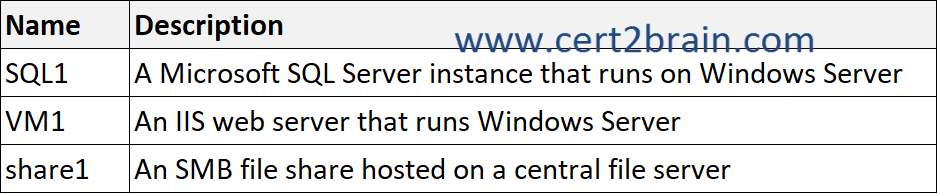

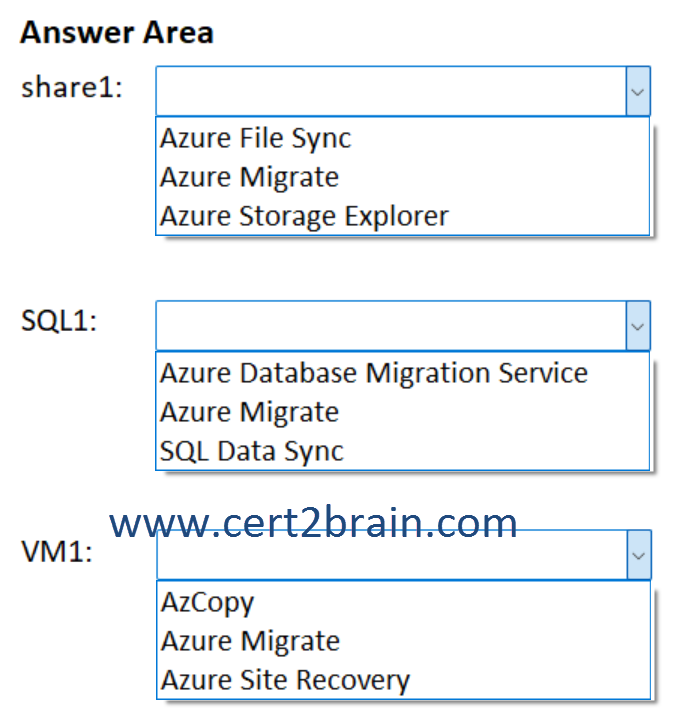

Question: 453

Measured Skill: Design infrastructure solutions (25-30%)

You have an on-premises app named App1 that uses the Hyper-V virtual machines shown in the following table.

You plan to migrate App1 to Azure.

You need to recommend which services to use for the migrations. The solution must meet the following requirements:

- share1 must be migrated to a premium Azure Files share.

- SQL1 must be migrated to an Azure SQL managed instance.

- The migrations must be completed with minimal downtime and zero data loss.

- VM1 must be migrated to an Azure virtual machine. A sizing assessment must be completed before the migration.

What should you recommend for each virtual machine?

(To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.)

| A | share1: Azure File Sync; SQL1: SQL Data Sync; VM1: AzCopy |

| B | share1: Azure File Sync; SQL1: Azure Database Migration Service; VM1: Azure Migrate |

| C | share1: Azure Migrate; SQL1: Azure Migrate; VM1: Azure Site Recovery |

| D | share1: Azure Migrate; SQL1: Azure Database Migration Service; VM1: Azure Site Recovery |

| E | share1: Azure Storage Explorer; SQL1: SQL Data Sync; VM1: AzCopy |

| F | share1: Azure Storage Explorer; SQL1: Azure Migrate; VM1: Azure Migrate |

Correct answer: B

Explanation:

To migrate share1 to a premium Azure Files share, we should use Azure File Sync. Azure File Sync enables seamless migration of your on-premises file data to Azure Files. By syncing your existing file servers with Azure Files in the background, you can move data without disrupting users or changing access patterns. Your file structure and permissions remain intact, and applications continue to operate as expected.

To migrate SQL1 to an Azure SQL managed instance, we should use the Azure Database Migration Service. Azure Database Migration Service is a fully managed service designed to enable seamless migrations from multiple database sources to Azure data platforms with minimal downtime (online migrations).

To migrate VM1 to an Azure virtual machine, we should use Azure Migrate. Azure Migrate is a service that helps you decide on, plan, and execute your migration to Azure. Azure Migrate provides support for servers, databases, web apps, virtual desktops, and large-scale offline migration by using Azure Data Box. The Azure Migrate: Discovery and assessment tool can assess on-premises servers in VMware and Hyper-V environments, as well as physical servers, to estimate the right size of Azure virtual machines (VMs). This covers the sizing assessment that must be completed before VM1 is migrated.

References:

What is Azure File Sync?

What is Azure Database Migration Service?

What is Azure Migrate?